Hi Team,

We're in the final steps of setting up GoodData.CN with BigQuery as our data source.

Could you please help us with the following:

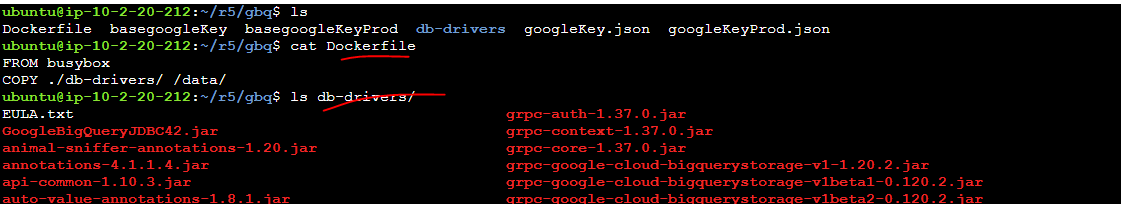

- I have built the GoodData.CN extra drivers Docker image, with the service_account.json file included in db-drivers, and pushed it to our private ECR repository as `<acc_id>.dkr.ecr.<region>.amazonaws.com/gooddata-cn-extra-drivers:latest`, as described in this document link. I need clarification on step 4:
  - 1a. In `--set sqlExecutor.extraDriversInitContainer=<custom-image-with-drivers>`, does `<custom-image-with-drivers>` mean the gooddata-cn-extra-drivers image URI string?
  - 1b. Alternatively, the step says to update the `sqlExecutor.extraDriversInitContainer` entry directly in the Helm chart options with the custom image. Could you share an example YAML snippet for customized-values-gooddata-cn.yaml showing how to include the custom drivers?
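
For reference, Helm treats a dotted `--set` key as a path into nested YAML, so the `--set` flag in step 4 should correspond to a nested entry in the values file. The sketch below assumes that `<custom-image-with-drivers>` is the full image reference (registry, repository, and tag) of the extra-drivers image. The key name is taken from the post; whether the chart expects a plain string at that key should be checked against the chart's own values.yaml for the installed version. The helpers `set_flag_to_values` and `to_yaml` are hypothetical.

```python
import json


def set_flag_to_values(flag: str) -> dict:
    """Turn a Helm --set style 'a.b.c=value' string into a nested dict."""
    key, _, value = flag.partition("=")
    values: dict = {}
    node = values
    *parents, leaf = key.split(".")
    for part in parents:
        node = node.setdefault(part, {})
    node[leaf] = value
    return values


def to_yaml(values: dict, indent: int = 0) -> str:
    """Minimal YAML emitter for nested dicts of strings (enough for this snippet)."""
    lines = []
    pad = "  " * indent
    for key, val in values.items():
        if isinstance(val, dict):
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(val, indent + 1))
        else:
            # json.dumps yields a double-quoted string, which is also valid YAML
            lines.append(f"{pad}{key}: {json.dumps(val)}")
    return "\n".join(lines)


IMAGE = "<acc_id>.dkr.ecr.<region>.amazonaws.com/gooddata-cn-extra-drivers:latest"
snippet = to_yaml(set_flag_to_values(f"sqlExecutor.extraDriversInitContainer={IMAGE}"))
print(snippet)
```

The printed lines could be pasted into customized-values-gooddata-cn.yaml, merged into any existing `sqlExecutor` section rather than duplicating the key.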

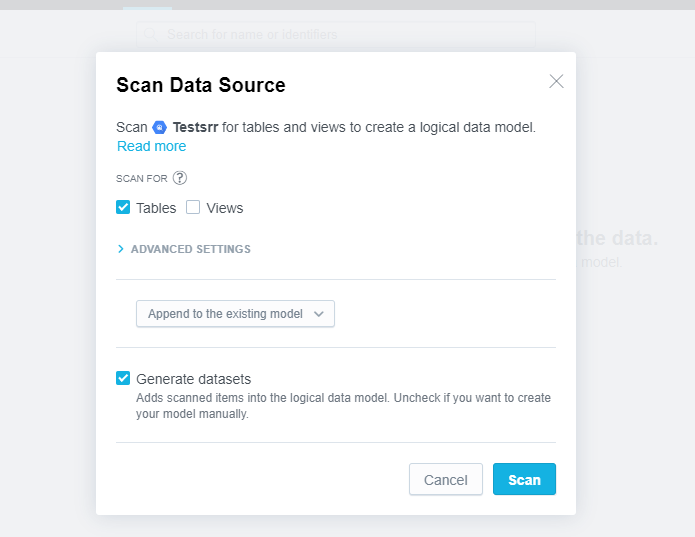

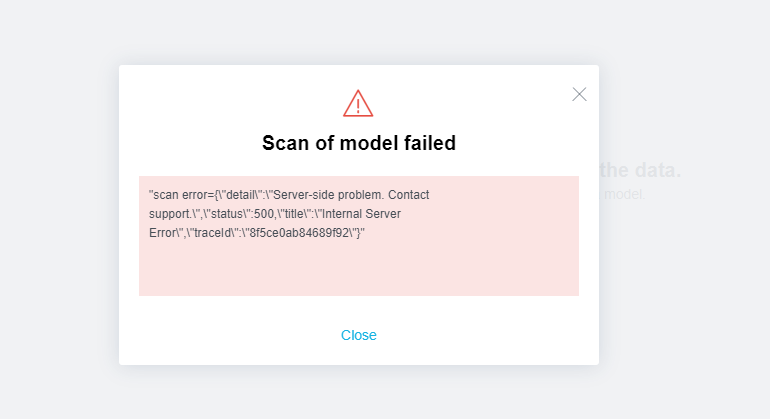

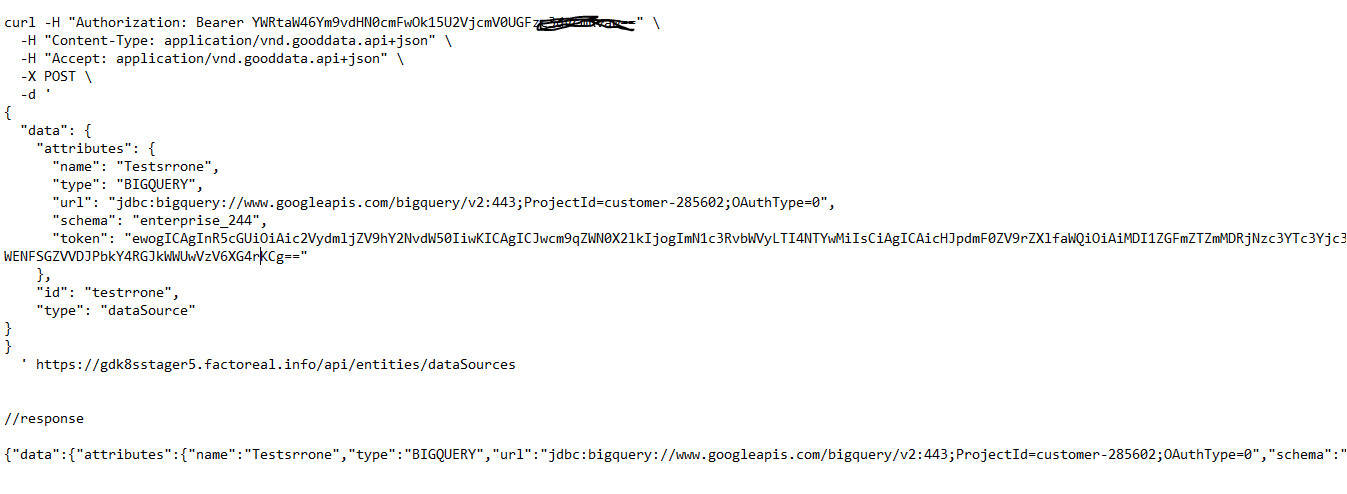

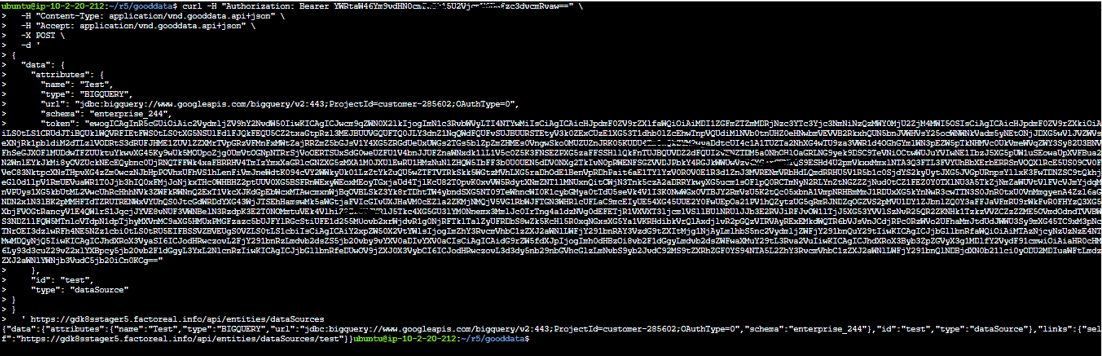

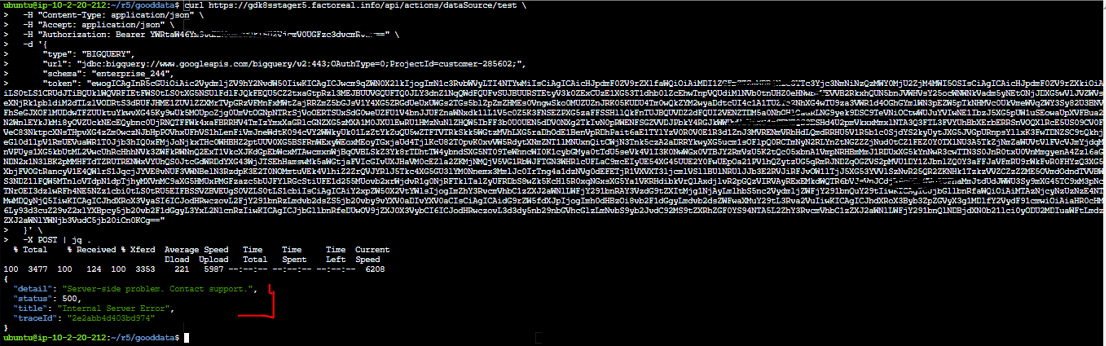

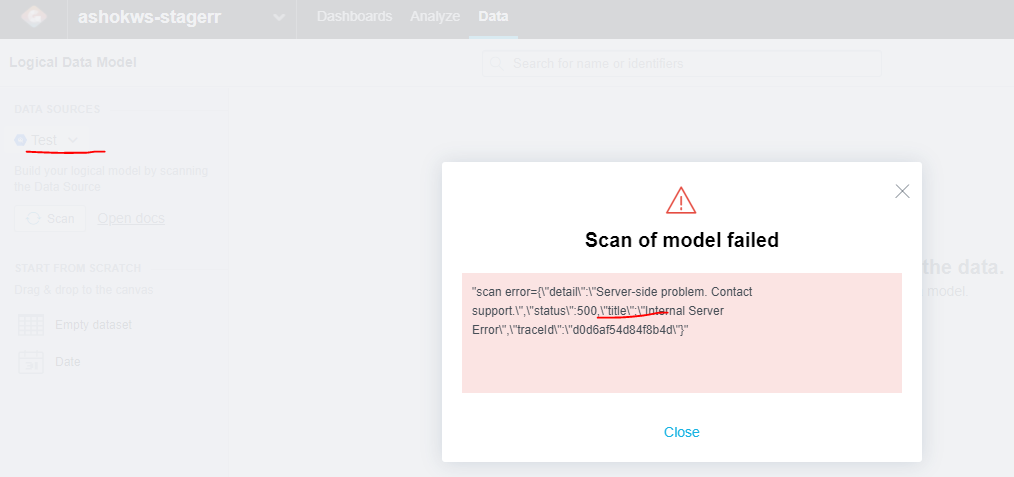

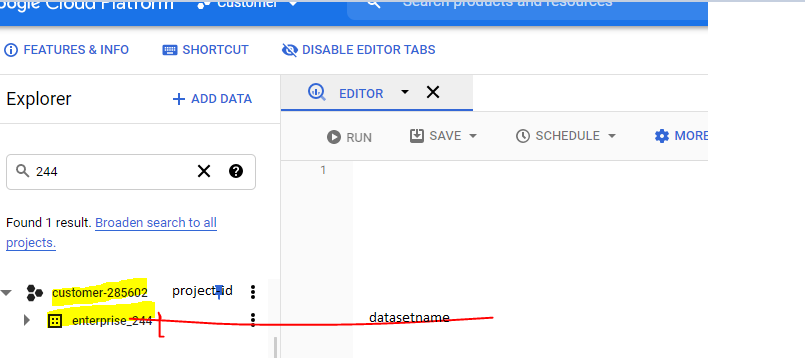

- Data source details: after running `base64 -w0 service_account.json`, what are the next steps to proceed and test the connection?

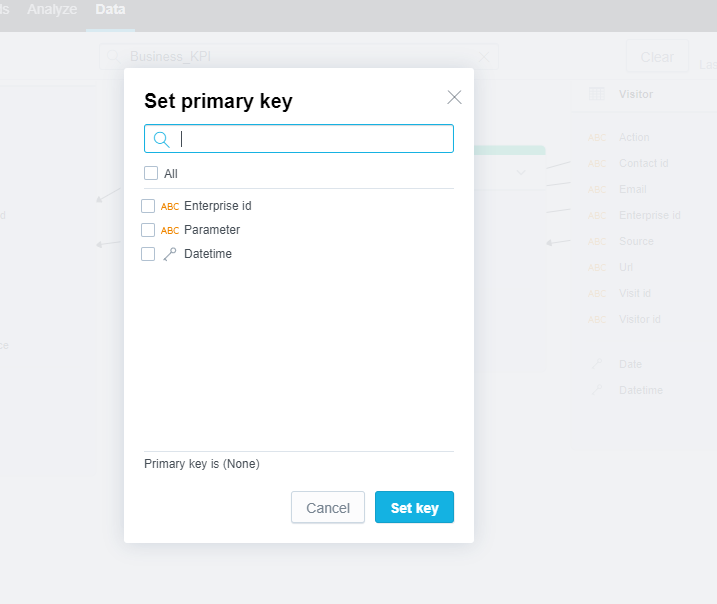
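
`base64 -w0` prints the encoding on a single line with no wrapping, which matters when the value is pasted into a JSON payload. A minimal Python equivalent, assuming the key file sits in the working directory (the function names are hypothetical):

```python
import base64
from pathlib import Path


def encode_bytes(data: bytes) -> str:
    """Base64-encode bytes as one unwrapped ASCII line (like `base64 -w0`)."""
    # b64encode never inserts newlines, unlike base64.encodebytes
    return base64.b64encode(data).decode("ascii")


def encode_service_account(path: str = "service_account.json") -> str:
    """Read the service account key file and return its single-line base64 encoding."""
    return encode_bytes(Path(path).read_bytes())
```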

- Registered data source: please provide an example of a registered data source for a GBQ dataset.
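
GoodData.CN registers data sources through its JSON:API entities endpoint `/api/v1/entities/dataSources`. The sketch below only builds the POST request and does not send it. The attribute names (`type: BIGQUERY`, `schema` for the dataset, `token` for the base64-encoded service account key), the host URL, and the IDs are assumptions for illustration; the exact attribute set differs between GoodData.CN versions, so verify it against the 1.3 documentation before use.

```python
import json
import urllib.request


def build_bigquery_data_source(ds_id: str, name: str, dataset: str, token_b64: str) -> dict:
    """Build a JSON:API payload for a BigQuery data source (attribute names assumed)."""
    return {
        "data": {
            "type": "dataSource",
            "id": ds_id,
            "attributes": {
                "name": name,
                "type": "BIGQUERY",    # assumed data source type identifier
                "schema": dataset,     # assumed: BigQuery dataset name
                "token": token_b64,    # assumed: output of `base64 -w0 service_account.json`
            },
        }
    }


def make_request(host: str, api_token: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) the POST request that registers the data source."""
    return urllib.request.Request(
        url=f"{host}/api/v1/entities/dataSources",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/vnd.gooddata.api+json",
            "Accept": "application/vnd.gooddata.api+json",
            "Authorization": f"Bearer {api_token}",
        },
    )
```

Calling `urllib.request.urlopen(req)` would send it; an HTTP error status raises `urllib.error.HTTPError`, whose body usually explains which attribute was rejected.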

What steps do you recommend for an existing GDCN 1.3 installation to make it work and sync with GBQ?

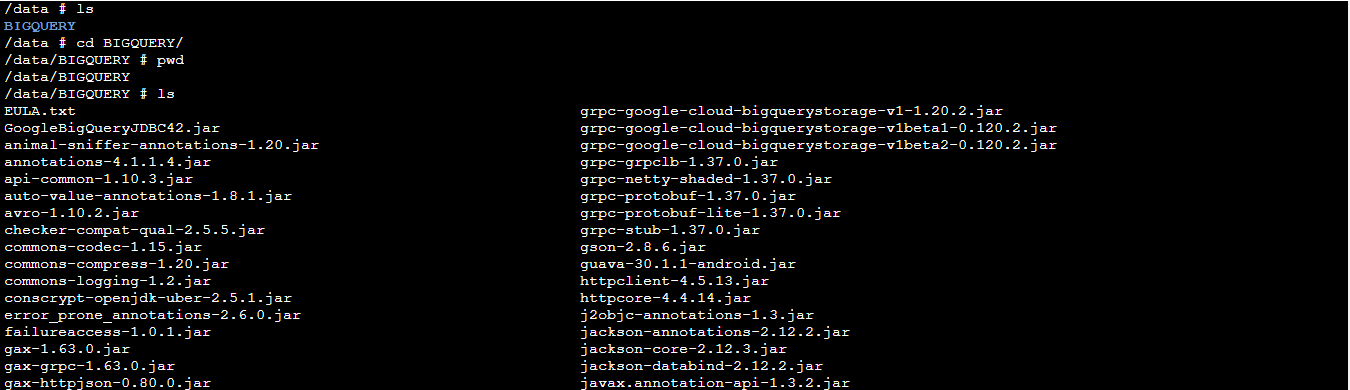
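
For an existing installation, applying the new `sqlExecutor.extraDriversInitContainer` value would typically be a `helm upgrade` of the current release with the updated values file. The sketch below only assembles the command; the release name `gooddata-cn`, chart reference `gooddata/gooddata-cn`, and namespace `gooddata-cn` are hypothetical (check the real ones with `helm list -A`).

```python
import shlex
from typing import List, Optional


def helm_upgrade_args(release: str, chart: str, namespace: str,
                      values_file: str, chart_version: Optional[str] = None) -> List[str]:
    """Assemble a `helm upgrade` command that applies an updated values file."""
    args = ["helm", "upgrade", release, chart,
            "--namespace", namespace,
            "--values", values_file]
    if chart_version:
        # Pin to the chart version already installed so only the values change
        args += ["--version", chart_version]
    return args


# Hypothetical release, chart, and namespace names
args = helm_upgrade_args("gooddata-cn", "gooddata/gooddata-cn", "gooddata-cn",
                         "customized-values-gooddata-cn.yaml")
print(shlex.join(args))
# To run it for real: subprocess.run(args, check=True)
```

When `--values` is passed without `--reuse-values`, Helm does not carry over overrides from the previous release, so the values file should contain all existing customizations plus the new key.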

JDBC driver info: SimbaJDBCDriverforGoogleBigQuery42_1.2.19.1023.zip
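
The Simba driver above is configured through a JDBC URL. If the GoodData.CN version in use expects such a URL rather than deriving the connection from the token, a service-account connection string might be built as sketched below. `OAuthType=0` selects service-account authentication in the Simba driver, and `project_id` and `client_email` are standard fields of a Google service account key file. The key path passed in is a hypothetical placeholder for wherever the key ends up inside the running container.

```python
SIMBA_BASE = "jdbc:bigquery://https://www.googleapis.com/bigquery/v2:443;"


def simba_bigquery_url(key: dict, key_path: str) -> str:
    """Build a Simba BigQuery JDBC URL for service-account auth from a parsed key file."""
    props = {
        "ProjectId": key["project_id"],
        "OAuthType": "0",  # 0 = service account authentication in the Simba driver
        "OAuthServiceAcctEmail": key["client_email"],
        "OAuthPvtKeyPath": key_path,  # assumed: key file path inside the container
    }
    # Simba properties are semicolon-separated name=value pairs after the base URL
    return SIMBA_BASE + "".join(f"{name}={value};" for name, value in props.items())
```

Typical use would be `simba_bigquery_url(json.loads(Path("service_account.json").read_text()), "<path-in-container>")`.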

Thanks and Regards,

Ashok

Best answer by jacek
